import bidon

def baz():

bidon.bidon()

def bar():

baz()

def foo():

bar()

if __name__=='__main__':

foo()

python:call: { cpu_id = 7 }, { co_name = "" }

python:call: { cpu_id = 7 }, { co_name = "foo" }

python:call: { cpu_id = 7 }, { co_name = "bar" }

python:call: { cpu_id = 7 }, { co_name = "baz" }

python:return: { cpu_id = 7 }, { }

python:return: { cpu_id = 7 }, { }

python:return: { cpu_id = 7 }, { }

python:return: { cpu_id = 7 }, { }

from distutils.core import setup

from Cython.Build import cythonize

from distutils.extension import Extension

ext = [

Extension('foo1', ['foo1.pyx'],

extra_compile_args = ['-g'],

),

Extension('foo2', ['foo2.pyx'],

extra_compile_args = ['-finstrument-functions', '-g'],

),

]

setup(

ext_modules = cythonize(ext),

)

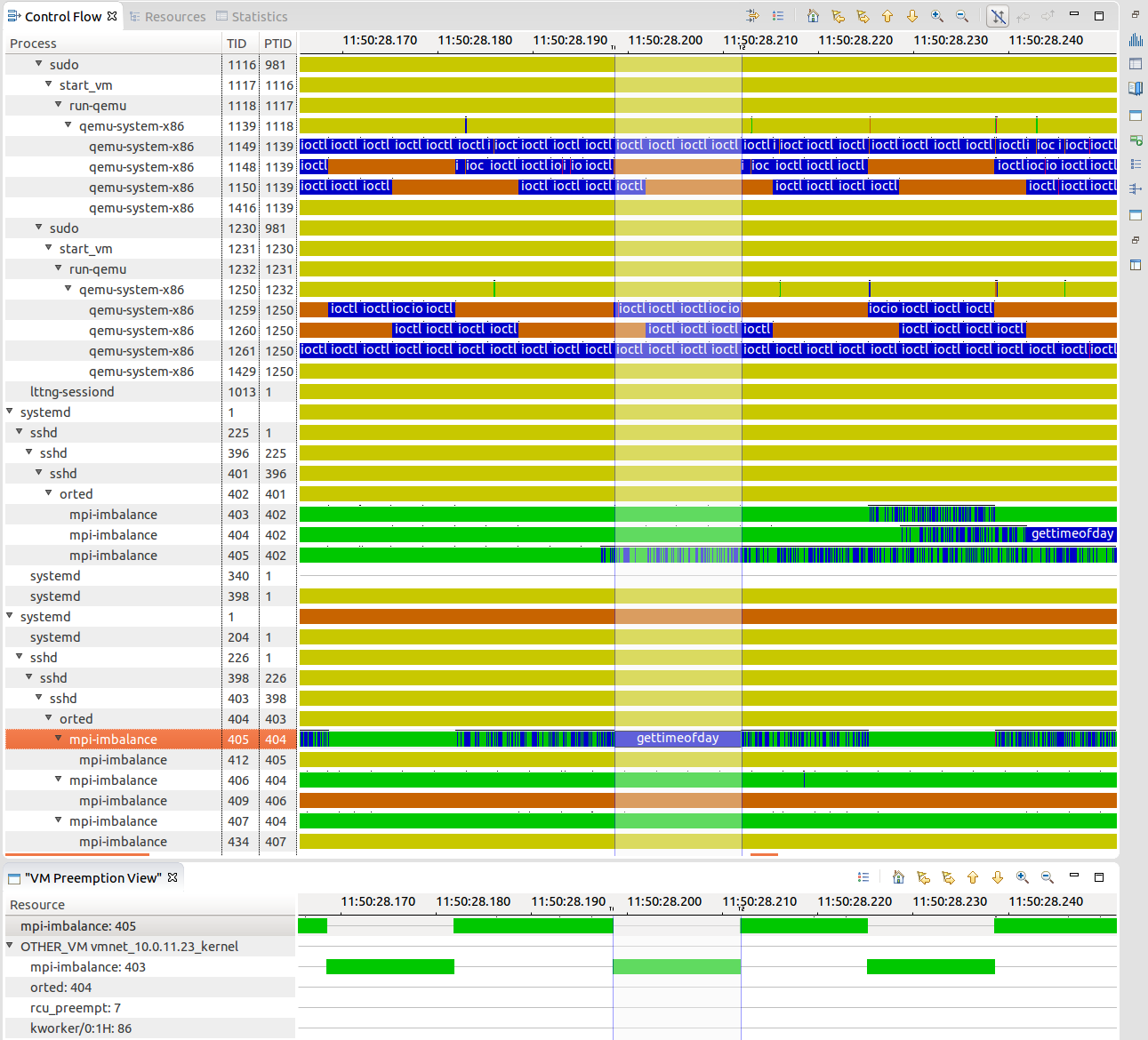

And then I invoked both modules foo1.foo() and foo2.foo(), with the lttng-ust-cyg-profile instrumentation, that hooks on the GCC instrumentation. I also enabled kernel tracing, just for fun. Both traces (kernel and user space) are loaded in an Trace Compass experiment.

We see in the screenshot below the users space stack according to time for the foo1 and foo2 executions. In the first execution, we see the entry point of inside foo1.so and the invocation of Python code from the library, but function calls inside foo1.so are not visible. In the second execution, both the Python and the GCC instrumentation are available, and the complete callstack is visible. The higlighted time range is about 6us and corresponds to the write() system call related to the print() on the console. We can see the system call duration and the blocking in the control flow view.

Unfortunately, symbols can't be resolved, because base address library loading can't be enabled at this time with Python instrumentation, due to this bug.

This was an example how three sources of events finally create a complete picture of the execution!

EDIT:

A Cython module can call profile hook of the main interpreter. Call cython with the profile option, and compile the resulting code with -DCYTHON_TRACE=1. This can be done in setuptools with:

cythonize(ext_trace, compiler_directives={'profile': True}),

Thanks to Stefan Behnel for this tip!